In this tutorial, you will learn what data leakage is and how to prevent it. If you don't know how to prevent it, leakage will come up frequently, and it will ruin your models in subtle and dangerous ways. So, this is one of the most important concepts for practicing data scientists.

Introduction

Data leakage (or leakage) happens when your training data contains information about the target, but similar data will not be available when the model is used for prediction. This leads to high performance on the training set (and possibly even the validation data), but the model will perform poorly in production.

In other words, leakage causes a model to look accurate until you start making decisions with the model, and then the model becomes very inaccurate.

There are two main types of leakage: target leakage and train-test contamination.

Target leakage

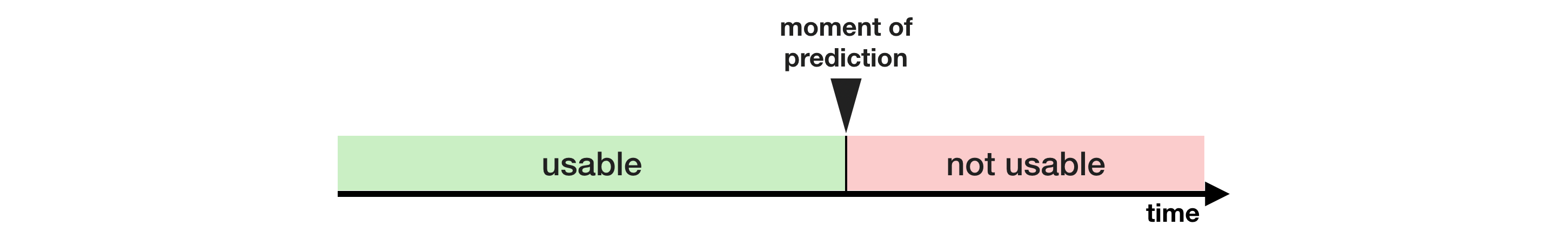

Target leakage occurs when your predictors include data that will not be available at the time you make predictions. It is important to think about target leakage in terms of the timing or chronological order that data becomes available, not merely whether a feature helps make good predictions.

An example will be helpful. Imagine you want to predict who will get sick with pneumonia. The top few rows of your raw data look like this:

| got_pneumonia | age | weight | male | took_antibiotic_medicine | ... |
|---|---|---|---|---|---|
| False | 65 | 100 | False | False | ... |
| False | 72 | 130 | True | False | ... |
| True | 58 | 100 | False | True | ... |

People take antibiotic medicines after getting pneumonia in order to recover. The raw data shows a strong relationship between those columns, but took_antibiotic_medicine is frequently changed after the value for got_pneumonia is determined. This is target leakage.

The model would see that anyone who has a value of False for took_antibiotic_medicine didn't have pneumonia. Since validation data comes from the same source as training data, the pattern will repeat itself in validation, and the model will have great validation (or cross-validation) scores.

But the model will be very inaccurate when subsequently deployed in the real world, because even patients who will get pneumonia won't have received antibiotics yet when we need to make predictions about their future health.

To prevent this type of data leakage, any variable updated (or created) after the target value is realized should be excluded.

In other words, you need to guard against reversing cause and effect.
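
As a minimal sketch of that rule, the snippet below rebuilds the pneumonia rows shown earlier and keeps only the columns that are known at prediction time. The `known_at_prediction_time` dictionary is a hypothetical piece of bookkeeping introduced for illustration; in practice that information has to come from understanding how the data was collected.

```python
import pandas as pd

# Toy records mirroring the pneumonia table shown earlier.
patients = pd.DataFrame({
    "got_pneumonia": [False, False, True],
    "age": [65, 72, 58],
    "weight": [100, 130, 100],
    "male": [False, True, False],
    "took_antibiotic_medicine": [False, False, True],
})

# Hypothetical bookkeeping (an assumption for this sketch): for each column,
# is its value already known at the moment a prediction has to be made?
known_at_prediction_time = {
    "age": True,
    "weight": True,
    "male": True,
    "took_antibiotic_medicine": False,  # updated after the diagnosis
}

target = "got_pneumonia"

# Keep only predictors that exist before the target value is realized.
safe_features = [
    col for col in patients.columns
    if col != target and known_at_prediction_time[col]
]

X = patients[safe_features]
y = patients[target]

print(safe_features)  # ['age', 'weight', 'male']
```

Writing the timing of each column down explicitly, even in a simple dictionary like this, makes the decision reviewable instead of leaving it implicit in a list of dropped columns.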

Train-Test Contamination

A different type of leak occurs when you aren't careful to distinguish training data from validation data.

Recall that validation is meant to be a measure of how the model does on data that it hasn't considered before. You can corrupt this process in subtle ways if the validation data affects the preprocessing behavior. This is sometimes called train-test contamination.

For example, imagine you run preprocessing (like fitting an imputer for missing values) before calling train_test_split(). The end result? Your model may get good validation scores, giving you great confidence in it, but perform poorly when you deploy it to make decisions.

After all, you incorporated data from the validation or test data into how you make predictions, so the model may do well on that particular data even if it can't generalize to new data. This problem becomes even more subtle (and more dangerous) when you do more complex feature engineering.
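
To make the contamination concrete, here is a small sketch on made-up numbers (the array below is illustrative, not taken from any dataset in this tutorial). It compares the fill value a mean imputer learns when it is fitted on all rows with the value it learns from the training rows alone. `shuffle=False` is used only so the split is easy to follow by eye.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.model_selection import train_test_split

# Made-up single-feature data; the last two rows will become validation data.
X = np.array([[1.0], [2.0], [np.nan], [3.0], [2.0], [np.nan], [50.0], [60.0]])

# shuffle=False keeps the first 6 rows for training and the last 2 for validation.
X_train, X_valid = train_test_split(X, test_size=0.25, shuffle=False)

# Contaminated: the imputer is fitted before the split, so it sees validation rows.
leaky_imputer = SimpleImputer(strategy="mean").fit(X)

# Clean: the imputer is fitted on the training rows only.
clean_imputer = SimpleImputer(strategy="mean").fit(X_train)

print("Fill value learned from all rows:      %.2f" % leaky_imputer.statistics_[0])
print("Fill value learned from training rows: %.2f" % clean_imputer.statistics_[0])
```

The two fill values differ because the large validation values pull the overall mean upward. Any model trained on data imputed with the first value has indirectly seen the validation set.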

If your validation is based on a simple train-test split, exclude the validation data from any type of fitting, including the fitting of preprocessing steps. This is easier if you use scikit-learn pipelines. When using cross-validation, it's even more critical that you do your preprocessing inside the pipeline!
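
The following sketch shows one way to keep preprocessing inside a scikit-learn pipeline during cross-validation. The data is synthetic and the names `X_demo` and `y_demo` are placeholders invented for this illustration. Because the imputer is a pipeline step, `cross_val_score` refits it on the training portion of every fold, so the held-out portion never influences the fill values.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.impute import SimpleImputer
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline

# Synthetic data (an assumption for this sketch): 200 rows, 4 numeric features.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(200, 4))
y_demo = (X_demo[:, 0] + X_demo[:, 1] > 0).astype(int)

# Knock out roughly 10% of the entries so there is something to impute.
missing_mask = rng.random(X_demo.shape) < 0.1
X_demo[missing_mask] = np.nan

# The imputer is part of the pipeline, so it is fitted per fold on training rows only.
pipeline = make_pipeline(
    SimpleImputer(strategy="median"),
    RandomForestClassifier(n_estimators=50, random_state=0),
)

scores = cross_val_score(pipeline, X_demo, y_demo, cv=5, scoring="accuracy")
print("Mean cross-validation accuracy: %.3f" % scores.mean())
```

Fitting the imputer on `X_demo` first and passing the imputed array to `cross_val_score` would run without error, which is exactly why this kind of contamination is easy to miss.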

Example

In this example, you will learn one way to detect and remove target leakage.

We will use a dataset about credit card applications and skip the basic data set-up code. The end result is that information about each credit card application is stored in a DataFrame X. We'll use it to predict which applications were accepted in a Series y.

    import pandas as pd

    # Read the data, mapping 'yes'/'no' strings to booleans
    data = pd.read_csv('../00 datasets/dansbecker/aer-credit-card-data/AER_credit_card_data.csv',
                       true_values=['yes'], false_values=['no'])

    # Select target
    y = data.card

    # Select predictors
    X = data.drop(['card'], axis=1)

    print("Number of rows in the dataset:", X.shape[0])
    X.head()

Number of rows in the dataset: 1319

| | reports | age | income | share | expenditure | owner | selfemp | dependents | months | majorcards | active |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 37.66667 | 4.5200 | 0.033270 | 124.983300 | True | False | 3 | 54 | 1 | 12 |
| 1 | 0 | 33.25000 | 2.4200 | 0.005217 | 9.854167 | False | False | 3 | 34 | 1 | 13 |
| 2 | 0 | 33.66667 | 4.5000 | 0.004156 | 15.000000 | True | False | 4 | 58 | 1 | 5 |
| 3 | 0 | 30.50000 | 2.5400 | 0.065214 | 137.869200 | False | False | 0 | 25 | 1 | 7 |
| 4 | 0 | 32.16667 | 9.7867 | 0.067051 | 546.503300 | True | False | 2 | 64 | 1 | 5 |

Since this is a small dataset, we will use cross-validation to ensure accurate measures of model quality.

    from sklearn.pipeline import make_pipeline
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.model_selection import cross_val_score

    # Since there is no preprocessing, we don't need a pipeline (used anyway as best practice!)
    my_pipeline = make_pipeline(RandomForestClassifier(n_estimators=100))
    cv_scores = cross_val_score(my_pipeline, X, y,
                                cv=5,
                                scoring='accuracy')

    print("Cross-validation accuracy: %f" % cv_scores.mean())

Cross-validation accuracy: 0.980292

With experience, you'll find that it's very rare to find models that are accurate 98% of the time. It happens, but it's uncommon enough that we should inspect the data more closely for target leakage.

Here is a summary of the data, which you can also find under the data tab:

- card: 1 if credit card application accepted, 0 if not
- reports: Number of major derogatory reports
- age: Age in years plus twelfths of a year
- income: Yearly income (divided by 10,000)
- share: Ratio of monthly credit card expenditure to yearly income
- expenditure: Average monthly credit card expenditure
- owner: 1 if owns home, 0 if rents
- selfemp: 1 if self-employed, 0 if not
- dependents: 1 + number of dependents
- months: Months living at current address
- majorcards: Number of major credit cards held
- active: Number of active credit accounts

A few variables look suspicious. For example, does expenditure mean expenditure on this card or on cards used before applying?

At this point, basic data comparisons can be very helpful:

    # y is a boolean Series, so it can be used directly as a row mask
    expenditures_cardholders = X.expenditure[y]
    expenditures_noncardholders = X.expenditure[~y]

    print('Fraction of those who did not receive a card and had no expenditures: %.2f'
          % (expenditures_noncardholders == 0).mean())
    print('Fraction of those who received a card and had no expenditures: %.2f'
          % (expenditures_cardholders == 0).mean())

Fraction of those who did not receive a card and had no expenditures: 1.00
Fraction of those who received a card and had no expenditures: 0.02

As shown above, everyone who did not receive a card had no expenditures, while only 2% of those who received a card had no expenditures. It's not surprising that our model appeared to have a high accuracy. But this also seems to be a case of target leakage, where expenditures probably means expenditures on the card they applied for.
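
The same kind of comparison can be automated as a rough screen. The sketch below is not part of the original tutorial: it scores each feature on its own with a shallow decision tree and flags any single column that predicts the target almost perfectly. The helper name `single_feature_scores` and the synthetic columns are assumptions made for illustration, with `expenditure` constructed to be leaky in the same way as described above.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the credit card data (values are made up).
rng = np.random.default_rng(0)
n = 500
y_demo = pd.Series(rng.random(n) < 0.75, name="card")
X_demo = pd.DataFrame({
    "age": rng.normal(35, 10, n),
    "income": rng.normal(3.5, 1.5, n),
    # Leaky by construction: non-zero only when the card was granted.
    "expenditure": np.where(y_demo, rng.gamma(2.0, 100.0, n), 0.0),
})


def single_feature_scores(X, y, cv=5):
    """Cross-validated accuracy of a shallow tree trained on each column alone."""
    scores = {}
    for col in X.columns:
        model = DecisionTreeClassifier(max_depth=2, random_state=0)
        scores[col] = cross_val_score(model, X[[col]], y, cv=cv,
                                      scoring="accuracy").mean()
    return pd.Series(scores).sort_values(ascending=False)


feature_scores = single_feature_scores(X_demo, y_demo)
print(feature_scores)
```

A feature that scores near 100% by itself is not proof of leakage, but it is a strong hint that the column deserves the kind of manual inspection done above.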

Since share is partially determined by expenditure, it should be excluded too. The variables active and majorcards are a little less clear, but from the description, they sound concerning. In most situations, it's better to be safe than sorry if you can't track down the people who created the data to find out more.

We would run a model without target leakage as follows:

    # Drop leaky predictors from dataset
    potential_leaks = ['expenditure', 'share', 'active', 'majorcards']
    X2 = X.drop(potential_leaks, axis=1)

    # Evaluate the model with leaky predictors removed
    cv_scores = cross_val_score(my_pipeline, X2, y,
                                cv=5,
                                scoring='accuracy')

    print("Cross-val accuracy: %f" % cv_scores.mean())

Cross-val accuracy: 0.833964

This accuracy is quite a bit lower, which might be disappointing. However, we can expect the model to be right a little over 80% of the time when used on new applications, whereas the leaky model would likely do much worse than that (in spite of its higher apparent score in cross-validation).

Conclusion

Data leakage can be a multi-million dollar mistake in many data science applications. Careful separation of training and validation data can prevent train-test contamination, and pipelines can help implement this separation. Likewise, a combination of caution, common sense, and data exploration can help identify target leakage.

What's next?

This may still seem abstract. Try thinking through the examples in this exercise to develop your skill identifying target leakage and train-test contamination!
