import numpy as np
import matplotlib.pyplot as plt
from itertools import product

def show_kernel(kernel, label=True, digits=None, text_size=28):
    # Format kernel
    kernel = np.array(kernel)
    if digits is not None:
        kernel = kernel.round(digits)

    # Plot kernel
    cmap = plt.get_cmap('Blues_r')
    plt.imshow(kernel, cmap=cmap)
    rows, cols = kernel.shape
    thresh = (kernel.max() + kernel.min()) / 2

    # Optionally, add value labels
    if label:
        for i, j in product(range(rows), range(cols)):
            val = kernel[i, j]
            color = cmap(0) if val > thresh else cmap(255)
            plt.text(j, i, val,
                     color=color, size=text_size,
                     horizontalalignment='center', verticalalignment='center')
    plt.xticks([])
    plt.yticks([])

Introduction

In the last lesson, we saw that a convolutional classifier has two parts: a convolutional base and a head of dense layers. We learned that the job of the base is to extract visual features from an image, which the head would then use to classify the image.

Over the next few lessons, we're going to learn about the two most important types of layers that you'll usually find in the base of a convolutional image classifier. These are the convolutional layer with ReLU activation, and the maximum pooling layer. In Lesson 5, you'll learn how to design your own convnet by composing these layers into blocks that perform the feature extraction.

This lesson is about the convolutional layer with its ReLU activation function.

Feature Extraction

Before we get into the details of convolution, let's discuss the purpose of these layers in the network. We're going to see how these three operations (convolution, ReLU, and maximum pooling) are used to implement the feature extraction process.

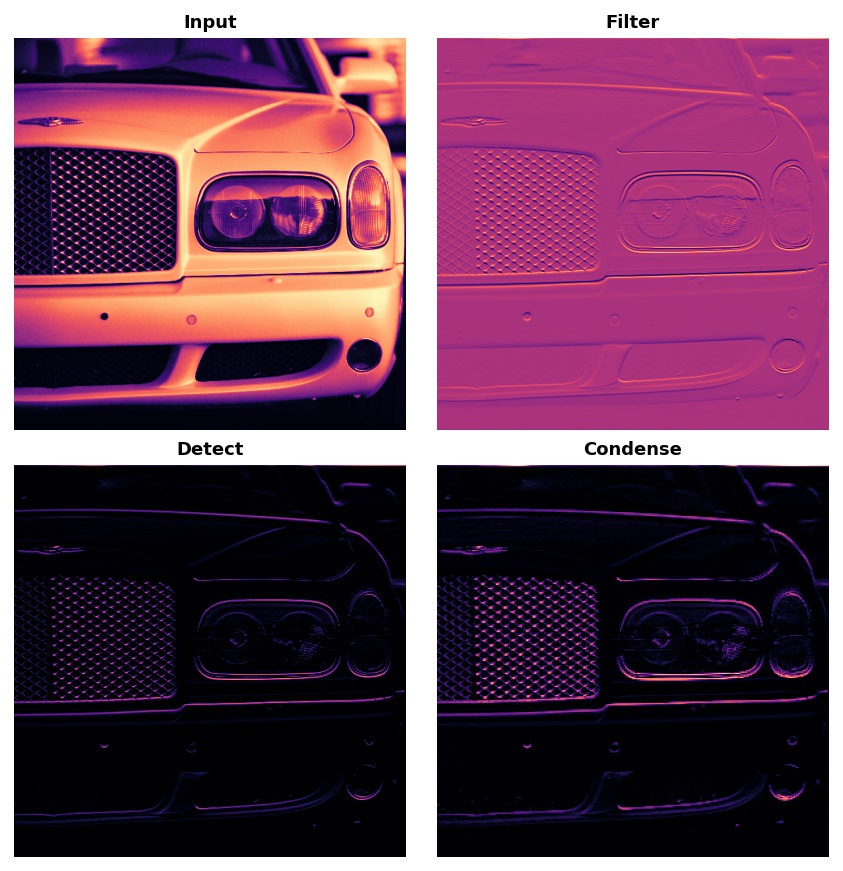

The feature extraction performed by the base consists of three basic operations:

- Filter an image for a particular feature (convolution)

- Detect that feature within the filtered image (ReLU)

- Condense the image to enhance the features (maximum pooling)

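The three steps can be sketched in plain NumPy on a toy image. This is a minimal illustration, not how Keras implements them: the helper names `filter_image`, `detect`, and `condense` are hypothetical, the horizontal-line kernel is hand-picked rather than learned, and the 2x2 max pooling stands in for the maximum pooling layer discussed in a later lesson.

```python
import numpy as np

def filter_image(image, kernel):
    """Filter: slide the kernel over the image (no padding) and take weighted sums."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def detect(feature_map):
    """Detect: ReLU keeps positive responses and sets negative ones to 0."""
    return np.maximum(feature_map, 0)

def condense(feature_map, size=2):
    """Condense: max pooling over non-overlapping size x size windows."""
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))

# Toy 6x6 image with a single bright horizontal line in row 2
image = np.zeros((6, 6))
image[2, :] = 1.0

# Hand-picked (not learned) kernel whose positive weights form a horizontal bar
kernel = np.array([[-1, -1, -1],
                   [ 2,  2,  2],
                   [-1, -1, -1]], dtype=float)

filtered = filter_image(image, kernel)  # shape (4, 4)
detected = detect(filtered)             # negatives -> 0
condensed = condense(detected)          # shape (2, 2)
print(condensed)
```

Only the windows centered on the line keep a positive response after the ReLU step, and pooling preserves that strong response while halving each spatial dimension.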

The next figure illustrates this process. You can see how these three operations are able to isolate some particular characteristic of the original image (in this case, horizontal lines).

Typically, the network will perform several extractions in parallel on a single image. In modern convnets, it's not uncommon for the final layer in the base to be producing over 1000 unique visual features.

Filter with Convolution

A convolutional layer carries out the filtering step. You might define a convolutional layer in a Keras model something like this:

from tensorflow import keras
from tensorflow.keras import layers

model = keras.Sequential([
    layers.Conv2D(filters=64, kernel_size=3),  # activation is None
    # More layers follow
])

We can understand these parameters by looking at their relationship to the weights and activations of the layer. Let's do that now.

Weights

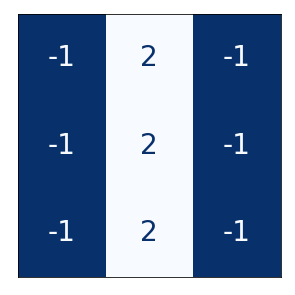

The weights a convnet learns during training are primarily contained in its convolutional layers. We call these weights kernels. We can represent them as small arrays:

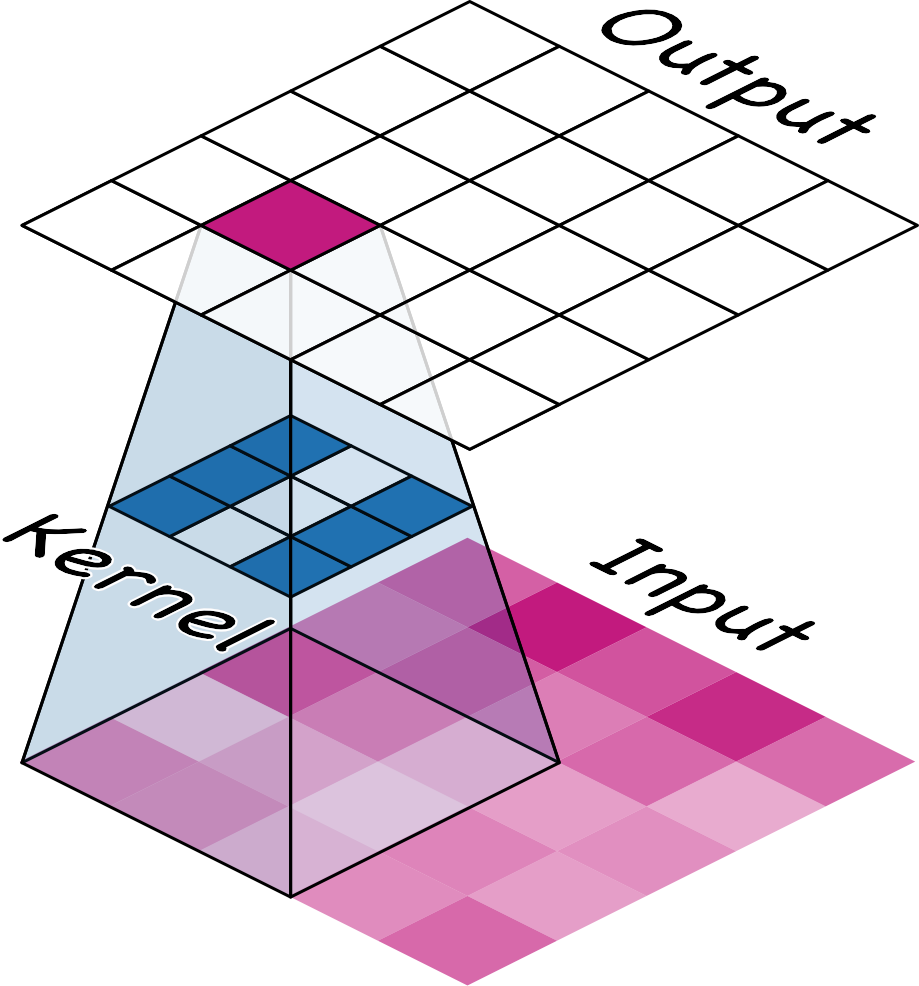

A kernel operates by scanning over an image and producing a weighted sum of pixel values. In this way, a kernel will act sort of like a polarized lens, emphasizing or deemphasizing certain patterns of information.

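To make the "weighted sum" concrete, here is a small NumPy sketch, assuming a single-channel image and no padding. It computes one output value by hand and then the full scan using `numpy.lib.stride_tricks.sliding_window_view` (available in NumPy 1.20 and later). Like deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation. The image and kernel values are made up for illustration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# A 4x4 "image" whose pixel values increase from left to right
image = np.arange(16, dtype=float).reshape(4, 4)

# A 3x3 kernel: +1 on the left column, -1 on the right column
kernel = np.array([[1, 0, -1],
                   [1, 0, -1],
                   [1, 0, -1]], dtype=float)

# One output value: the weighted sum of the top-left 3x3 patch
top_left = np.sum(image[0:3, 0:3] * kernel)

# Scanning: every 3x3 window of the image, shape (2, 2, 3, 3)
windows = sliding_window_view(image, kernel.shape)

# Weighted sum of each window with the kernel, shape (2, 2)
feature_map = np.einsum('ijkl,kl->ij', windows, kernel)

print(top_left)  # -6.0
print(feature_map)
```

Because the toy image brightens by the same amount across every window, each window produces the same weighted sum, so the whole feature map equals the hand-computed value.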

Kernels define how a convolutional layer is connected to the layer that follows. The kernel above will connect each neuron in the output to nine neurons in the input. By setting the dimensions of the kernels with kernel_size, you are telling the convnet how to form these connections. Most often, a kernel will have odd-numbered dimensions -- like kernel_size=(3, 3) or (5, 5) -- so that a single pixel sits at the center, but this is not a requirement.

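The "nine neurons" count assumes a single-channel input; with more input channels, each kernel spans all of them. The helper below (a hypothetical function written for this illustration) counts how many values a Keras-style `Conv2D` layer learns, assuming it also learns one bias per filter, which is what Keras `Conv2D` does by default (`use_bias=True`).

```python
def conv2d_param_count(kernel_size, in_channels, filters, use_bias=True):
    """Count the trainable values in a 2D convolutional layer."""
    if isinstance(kernel_size, int):
        kernel_size = (kernel_size, kernel_size)
    kh, kw = kernel_size
    # Each output value connects to kh * kw input positions in every input channel,
    # and there is one such kernel per filter
    weights = kh * kw * in_channels * filters
    biases = filters if use_bias else 0
    return weights + biases

print(conv2d_param_count(3, in_channels=1, filters=1, use_bias=False))  # 9
print(conv2d_param_count(3, in_channels=3, filters=64))                 # 1792
print(conv2d_param_count((5, 5), in_channels=3, filters=64))            # 4864
```

For the `Conv2D(filters=64, kernel_size=3)` layer defined earlier, applied to an RGB image, this gives 1,792 trainable values; a 5x5 kernel raises that to 4,864.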

The kernels in a convolutional layer determine what kinds of features it creates. During training, a convnet tries to learn what features it needs to solve the classification problem. This means finding the best values for its kernels.

Activations

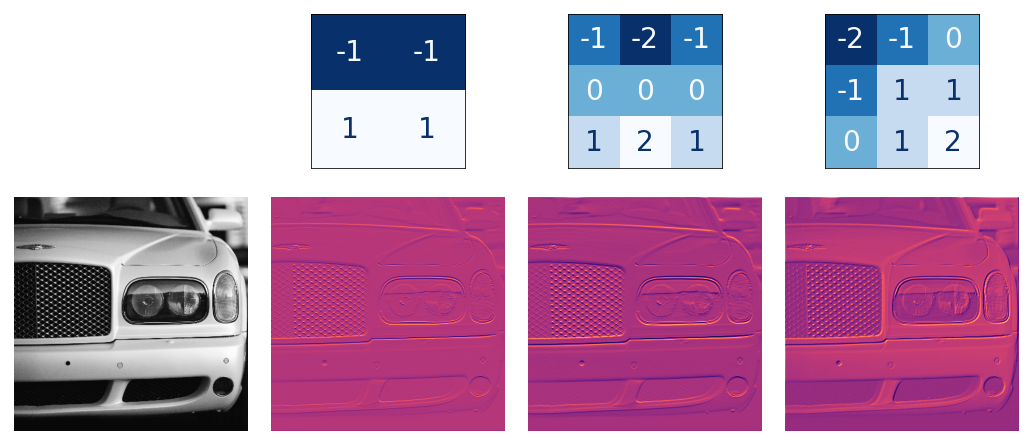

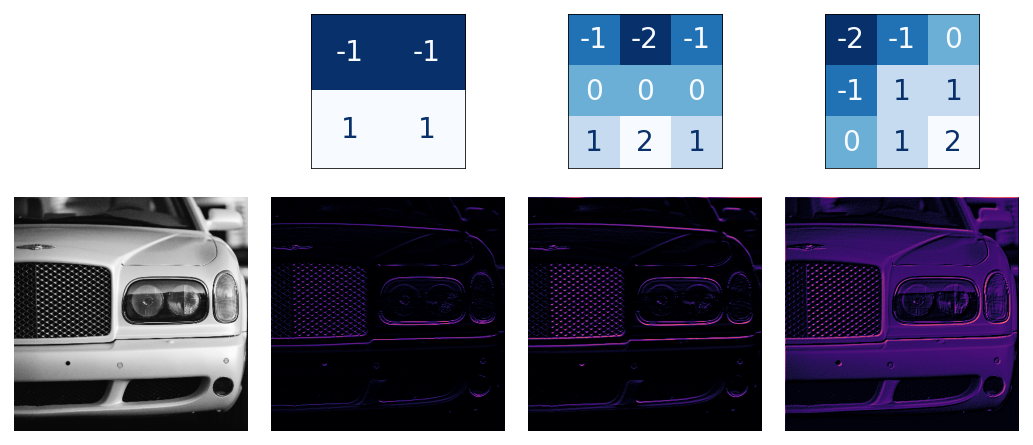

We call the activations in the network feature maps. They are what results when we apply a filter to an image; they contain the visual features the kernel extracts. Here are a few kernels pictured with the feature maps they produced.

From the pattern of numbers in the kernel, you can tell the kinds of feature maps it creates. Generally, what a convolution accentuates in its inputs will match the shape of the positive numbers in the kernel. The left and middle kernels above will both filter for horizontal shapes.

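A quick NumPy check of this rule, using a hypothetical hand-made kernel whose positive weights form a horizontal bar, and its transpose for a vertical bar. Each kernel responds strongly only to a line with the matching orientation. The images and kernels here are illustrative, not the ones pictured above, and the filtering uses no padding.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def correlate_valid(image, kernel):
    """Weighted sum of every kernel-sized window (no padding, no kernel flip)."""
    windows = sliding_window_view(image, kernel.shape)
    return np.einsum('ijkl,kl->ij', windows, kernel)

# Positive weights form a horizontal bar in the middle row
horizontal_kernel = np.array([[-1, -1, -1],
                              [ 2,  2,  2],
                              [-1, -1, -1]], dtype=float)
# Transposed: positive weights form a vertical bar in the middle column
vertical_kernel = horizontal_kernel.T

# 7x7 test images: one with a horizontal line, one with a vertical line
h_line = np.zeros((7, 7))
h_line[3, :] = 1.0
v_line = np.zeros((7, 7))
v_line[:, 3] = 1.0

for name, img in [("horizontal line", h_line), ("vertical line", v_line)]:
    h_resp = correlate_valid(img, horizontal_kernel).max()
    v_resp = correlate_valid(img, vertical_kernel).max()
    print(f"{name}: horizontal kernel max={h_resp}, vertical kernel max={v_resp}")
```

A mismatched line crosses the kernel's positive bar and its two negative bars equally, so the weights cancel and the response stays at zero.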

With the filters parameter, you tell the convolutional layer how many feature maps you want it to create as output.

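One way to picture `filters`: a layer with `filters=4` holds four kernels and returns one feature map per kernel, stacked along a last "channels" axis. This NumPy sketch uses random kernels on a single-channel image with no padding. Keras stores the learned `Conv2D` kernel with shape `(kernel_height, kernel_width, in_channels, filters)`; here the `in_channels` axis is dropped because the toy image has only one channel.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

rng = np.random.default_rng(0)
image = rng.random((8, 8))                   # one single-channel image

filters = 4                                  # how many feature maps we want
kernels = rng.normal(size=(3, 3, filters))   # one random 3x3 kernel per feature map

windows = sliding_window_view(image, (3, 3))                  # shape (6, 6, 3, 3)
feature_maps = np.einsum('ijkl,klf->ijf', windows, kernels)   # shape (6, 6, filters)

print(feature_maps.shape)  # (6, 6, 4)
```

Each slice `feature_maps[..., f]` is exactly what kernel `f` alone would produce, so increasing `filters` adds feature maps without changing their height and width.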

Detect with ReLU

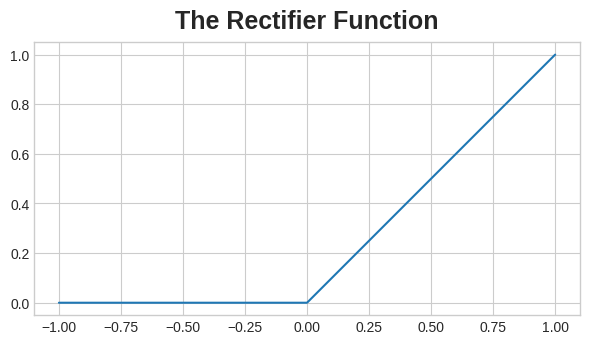

After filtering, the feature maps pass through the activation function. The rectifier function has a graph like this:

The graph of the rectifier function looks like a line with the negative part "rectified" to 0. A neuron with a rectifier attached is called a rectified linear unit. For that reason, we might also call the rectifier function the ReLU activation or even the ReLU function.

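In code, the rectifier is just an elementwise maximum with zero. A NumPy sketch (the function name `relu` is our own; TensorFlow provides the same operation as `tf.nn.relu`, and Keras layers accept it as `activation='relu'`):

```python
import numpy as np

def relu(x):
    """Rectifier: negative values become 0, non-negative values pass through unchanged."""
    return np.maximum(x, 0)

# A tiny made-up feature map with mixed signs
feature_map = np.array([[-2.0, 0.5],
                        [ 3.0, -0.1]])
print(relu(feature_map))
```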

The ReLU activation can be defined in its own Activation layer, but most often you'll just include it as the activation function of Conv2D.

model = keras.Sequential([
    layers.Conv2D(filters=64, kernel_size=3, activation='relu'),
    # More layers follow
])

You could think about the activation function as scoring pixel values according to some measure of importance. The ReLU activation says that negative values are not important and so sets them to 0. ("Everything unimportant is equally unimportant.")

Here is ReLU applied to the feature maps above. Notice how it succeeds at isolating the features.

Like other activation functions, the ReLU function is nonlinear. Essentially, this means that the total effect of all the layers in the network becomes different from what you would get by just adding the effects together, which would be the same as what you could achieve with only a single layer. The nonlinearity ensures features will combine in interesting ways as they move deeper into the network. (We'll explore this "feature compounding" more in Lesson 5.)

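The claim that stacked linear layers collapse into a single layer can be checked with two tiny hand-picked weight matrices. This is a sketch: dense matrices stand in for convolutions, which are also linear operations, and the weights are chosen only to make the effect easy to see.

```python
import numpy as np

def relu(z):
    """Rectifier: negative values become 0."""
    return np.maximum(z, 0)

# Two tiny hand-picked linear layers (weights chosen for illustration)
W1 = np.array([[ 1.0, -1.0],
               [-1.0,  1.0]])
W2 = np.array([[1.0, 1.0]])
x = np.array([2.0, 1.0])

# Without an activation, two layers equal one layer with weights W2 @ W1
two_linear = W2 @ (W1 @ x)     # W1 @ x = [1, -1], and W2 sums it to [0]
one_linear = (W2 @ W1) @ x     # W2 @ W1 = [[0, 0]], so the result is [0]

# With ReLU in between, the -1 is zeroed out and the result changes
with_relu = W2 @ relu(W1 @ x)  # relu([1, -1]) = [1, 0], so the result is [1]

print(two_linear, one_linear, with_relu)
```

No single matrix can reproduce the ReLU version for every input, which is why the activation lets stacked layers do more than one layer could.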

Example - Apply Convolution and ReLU

We'll do the extraction ourselves in this example to understand better what convolutional networks are doing "behind the scenes".

Here is the image we'll use for this example:

import tensorflow as tf
import matplotlib.pyplot as plt

plt.rc('figure', autolayout=True)
plt.rc('axes', labelweight='bold', labelsize='large',
       titleweight='bold', titlesize=18, titlepad=10)
plt.rc('image', cmap='magma')

image_path = '../input/computer-vision-resources/car_feature.jpg'
image = tf.io.read_file(image_path)
image = tf.io.decode_jpeg(image)

plt.figure(figsize=(6, 6))
plt.imshow(tf.squeeze(image), cmap='gray')
plt.axis('off')
plt.show();

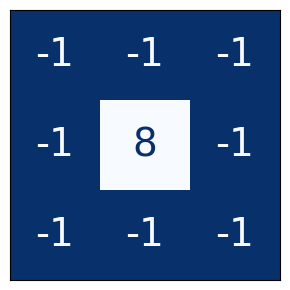

For the filtering step, we'll define a kernel and then apply it with the convolution. The kernel in this case is an "edge detection" kernel. You can define it with tf.constant just like you'd define an array in NumPy with np.array. This creates a tensor of the sort TensorFlow uses.

import tensorflow as tf

kernel = tf.constant([
    [-1, -1, -1],
    [-1,  8, -1],
    [-1, -1, -1],
])

plt.figure(figsize=(3, 3))
show_kernel(kernel)
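Why "edge detection"? The kernel's weights sum to zero, so on any patch of constant brightness the positive center weight and the eight negative neighbors cancel, and only changes in brightness produce a response. A NumPy sketch on a made-up image with a dark left half and a bright right half (single channel, no padding):

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

kernel = np.array([[-1, -1, -1],
                   [-1,  8, -1],
                   [-1, -1, -1]], dtype=float)
print(kernel.sum())  # 0.0

# Made-up 5x6 image: left half dark (0.2), right half bright (0.9)
image = np.full((5, 6), 0.2)
image[:, 3:] = 0.9

windows = sliding_window_view(image, kernel.shape)   # shape (3, 4, 3, 3)
response = np.einsum('ijkl,kl->ij', windows, kernel)  # shape (3, 4)
print(np.round(response, 2))
```

The response is zero inside both flat regions and nonzero only in the two columns of windows that straddle the boundary: negative where the center pixel is on the dark side, positive where it is on the bright side.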

TensorFlow includes many common operations performed by neural networks in its tf.nn module. The two that we'll use are conv2d and relu. These are simply function versions of Keras layers.

The next cell does some reformatting to make things compatible with TensorFlow. The details aren't important for this example.

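For reference, here are the shapes involved, sketched in NumPy with a stand-in image (the 100x120 single-channel size is hypothetical). `tf.nn.conv2d` expects its input as `(batch, height, width, channels)` and its filters as `(filter_height, filter_width, in_channels, out_channels)`, and `tf.image.convert_image_dtype` rescales `uint8` pixels to floats in [0, 1]. The NumPy lines only mirror those steps; they do not call TensorFlow.

```python
import numpy as np

# Stand-in for a decoded single-channel JPEG: (height, width, channels), uint8
image = np.zeros((100, 120, 1), dtype=np.uint8)
kernel = np.array([[-1, -1, -1],
                   [-1,  8, -1],
                   [-1, -1, -1]])

# Like convert_image_dtype: uint8 values in [0, 255] -> float32 values in [0, 1]
image = image.astype(np.float32) / np.float32(255.0)
# Add a batch axis: (batch, height, width, channels)
image = np.expand_dims(image, axis=0)
# Kernel as (height, width, in_channels, out_channels), in float32
kernel = kernel.reshape(*kernel.shape, 1, 1).astype(np.float32)

print(image.shape, kernel.shape)  # (1, 100, 120, 1) (3, 3, 1, 1)
```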

# Reformat for batch compatibility.
image = tf.image.convert_image_dtype(image, dtype=tf.float32)
image = tf.expand_dims(image, axis=0)
kernel = tf.reshape(kernel, [*kernel.shape, 1, 1])
kernel = tf.cast(kernel, dtype=tf.float32)

Now let's apply our kernel and see what happens.

image_filter = tf.nn.conv2d(
    input=image,
    filters=kernel,
    # we'll talk about these two in Lesson 4!
    strides=1,
    padding='SAME',
)

plt.figure(figsize=(6, 6))
plt.imshow(tf.squeeze(image_filter))
plt.axis('off')
plt.show();
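What `tf.nn.conv2d` computes here can be sketched in NumPy for the case used in this example: one image, one channel, `strides=1`, and `padding='SAME'`. With stride 1, `'SAME'` zero-pads the input so the output keeps the input's height and width; for a 3x3 kernel that is one row or column of zeros on each side. The function `conv2d_same` is a hypothetical helper for illustration, not part of TensorFlow, and the single bright pixel is a made-up test image.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv2d_same(image, kernel):
    """Single-channel, stride-1 filtering with 'SAME'-style zero padding."""
    kh, kw = kernel.shape
    pad_h, pad_w = kh - 1, kw - 1
    # Split the padding between the two sides (any extra row/column goes bottom/right)
    padded = np.pad(image, ((pad_h // 2, pad_h - pad_h // 2),
                            (pad_w // 2, pad_w - pad_w // 2)))
    windows = sliding_window_view(padded, kernel.shape)
    # Weighted sum per window, without flipping the kernel
    return np.einsum('ijkl,kl->ij', windows, kernel)

kernel = np.array([[-1, -1, -1],
                   [-1,  8, -1],
                   [-1, -1, -1]], dtype=np.float32)
image = np.zeros((5, 5), dtype=np.float32)
image[2, 2] = 1.0  # a single bright pixel

out = conv2d_same(image, kernel)
print(out.shape)  # (5, 5)
print(out)
```

The single bright pixel turns into an 8 surrounded by a ring of -1 values, matching this symmetric kernel, and the output has the same 5x5 shape as the input.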

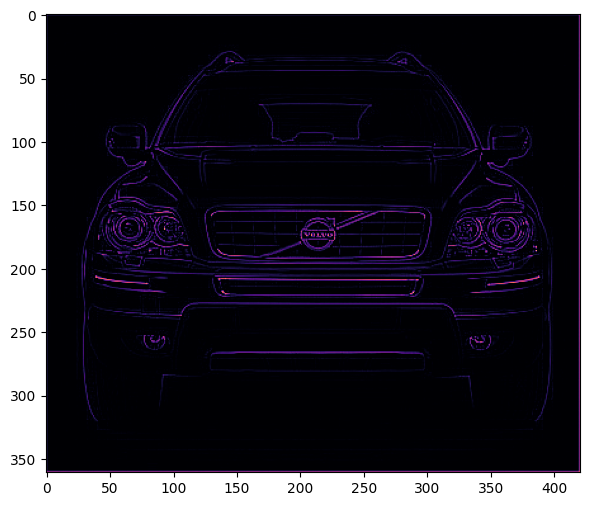

Next is the detection step with the ReLU function. This function is much simpler than the convolution, as it doesn't have any parameters to set.

image_detect = tf.nn.relu(image_filter)

plt.figure(figsize=(6, 6))
plt.imshow(tf.squeeze(image_detect))
# plt.axis('off')
plt.show();

And now we've created a feature map! Images like these are what the head uses to solve its classification problem. We can imagine that certain features might be more characteristic of Cars and others more characteristic of Trucks. The task of a convnet during training is to create kernels that can find those features.

Conclusion

We saw in this lesson the first two steps a convnet uses to perform feature extraction: filter with Conv2D layers and detect with the ReLU activation.

Your Turn

In the exercises, you'll have a chance to experiment with the kernels in the pretrained VGG16 model we used in Lesson 1.
