Introduction

In this lesson we're going to see how we can build neural networks capable of learning the complex kinds of relationships deep neural nets are famous for.

The key idea here is modularity, building up a complex network from simpler functional units. We've seen how a linear unit computes a linear function -- now we'll see how to combine and modify these single units to model more complex relationships.

Layers

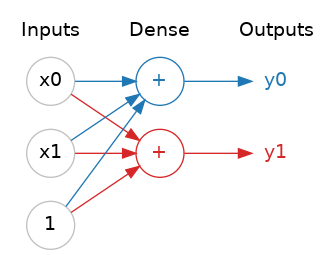

Neural networks typically organize their neurons into layers. When we collect together linear units having a common set of inputs we get a dense layer.

You could think of each layer in a neural network as performing some kind of relatively simple transformation. Through a deep stack of layers, a neural network can transform its inputs in more and more complex ways. In a well-trained neural network, each layer is a transformation getting us a little bit closer to a solution.
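
To make the idea of a dense layer concrete, here is a minimal sketch in plain Python (no Keras). The weights, biases, and the helper names `linear_unit` and `dense_layer` are hypothetical, chosen only for illustration; the point is simply that every unit in the layer reads the same inputs and contributes one output.

```python
def linear_unit(inputs, weights, bias):
    """One linear unit: a weighted sum of the inputs plus a bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


def dense_layer(inputs, weight_rows, biases):
    """A dense layer: each unit sees the same inputs and yields one output."""
    return [linear_unit(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical values, not taken from any trained model.
x = [1.0, 2.0]                             # two input features
W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # three units, two weights each
b = [0.0, 1.0, -1.0]                       # one bias per unit

outputs = dense_layer(x, W, b)
print(outputs)  # [1.0, 3.0, 2.0]
```

Three units sharing two inputs produce three outputs, which is the transformation a dense layer with three units would apply.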

Many Kinds of Layers

A "layer" in Keras is a very general kind of thing. A layer can be, essentially, any kind of data transformation. Many layers, like the convolutional and [recurrent](https://www.tensorflow.org/api_docs/python/tf/keras/layers/RNN) layers, transform data through the use of neurons and differ primarily in the pattern of connections they form. Others, though, are used for feature engineering or just [simple arithmetic](https://www.tensorflow.org/api_docs/python/tf/keras/layers/Add). There's a whole world of layers to discover -- check them out!

The Activation Function

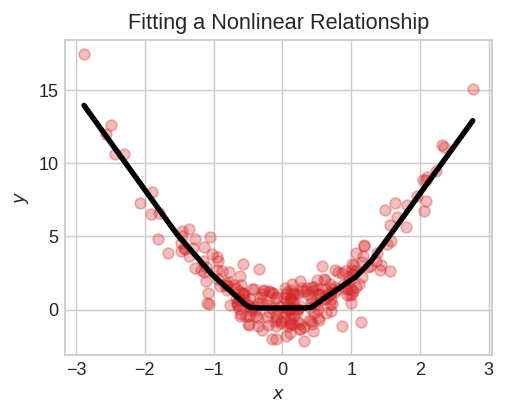

It turns out, however, that two dense layers with nothing in between are no better than a single dense layer by itself. Dense layers by themselves can never move us out of the world of lines and planes. What we need is something nonlinear. What we need are activation functions.
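
One way to see why, sketched in plain Python with hypothetical weights: feeding one dense layer straight into another gives exactly the same outputs as a single dense layer whose weight matrix is the product of the two weight matrices and whose bias is the second layer applied to the first layer's bias. The helper `dense` and all the numbers below are assumptions made for the example.

```python
def dense(x, W, b):
    """Apply a dense layer with no activation: W @ x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


# Layer 1 maps 2 inputs to 2 units; layer 2 maps those 2 units to 1 output.
W1 = [[1.0, 2.0], [3.0, 4.0]]
b1 = [1.0, -1.0]
W2 = [[2.0, -1.0]]
b2 = [0.5]

# The equivalent single layer: W = W2 @ W1 and b = W2 @ b1 + b2.
W = [[sum(W2[i][k] * W1[k][j] for k in range(2)) for j in range(2)]
     for i in range(1)]
b = [sum(W2[i][k] * b1[k] for k in range(2)) + b2[i] for i in range(1)]

test_inputs = [[0.0, 0.0], [1.0, 0.0], [3.0, -2.0], [-5.0, 7.0]]
for x in test_inputs:
    stacked = dense(dense(x, W1, b1), W2, b2)
    single = dense(x, W, b)
    print(x, stacked, single)  # the last two columns always agree
```

However many such layers are stacked, the result is still a single linear function of the inputs, which is why a nonlinearity is needed between them.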

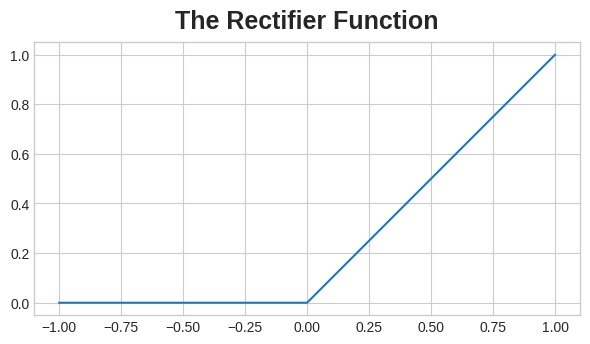

An activation function is simply some function we apply to each of a layer's outputs (its activations). The most common is the rectifier function $\max(0, x)$.

The rectifier function has a graph that's a line with the negative part "rectified" to zero. Applying the function to the outputs of a neuron will put a bend in the data, moving us away from simple lines.

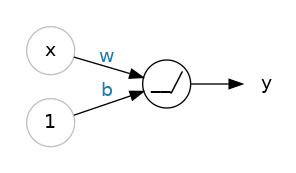

When we attach the rectifier to a linear unit, we get a rectified linear unit or ReLU. (For this reason, it's common to call the rectifier function the "ReLU function".) Applying a ReLU activation to a linear unit means the output becomes $\max(0, w \cdot x + b)$, which can also be drawn as a diagram.
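
As a small numeric illustration, the sketch below evaluates a single ReLU unit at a few inputs. The weight `w = 2.0`, the bias `b = -1.0`, and the function name `relu_unit` are hypothetical values picked for the example.

```python
def relu_unit(x, w, b):
    """A linear unit followed by the rectifier: max(0, w * x + b)."""
    return max(0.0, w * x + b)


# Hypothetical weight and bias, chosen so the bend falls at x = 0.5.
w, b = 2.0, -1.0
xs = [-1.0, 0.0, 0.5, 1.0, 2.0]
ys = [relu_unit(x, w, b) for x in xs]
print(ys)  # [0.0, 0.0, 0.0, 1.0, 3.0]
```

The output stays at zero until `w * x + b` becomes positive, then follows the line; that is the bend the rectifier introduces.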

Stacking Dense Layers

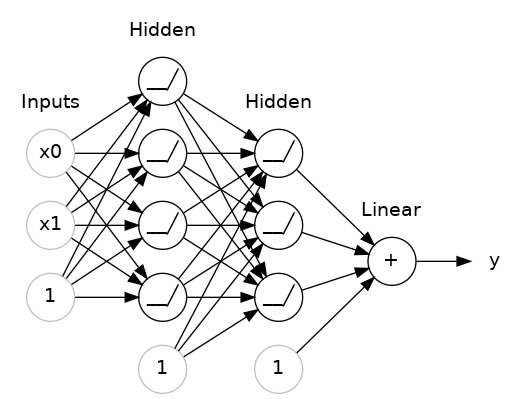

Now that we have some nonlinearity, let's see how we can stack layers to get complex data transformations.

The layers before the output layer are sometimes called hidden since we never see their outputs directly.

Now, notice that the final (output) layer is a linear unit (meaning, no activation function). That makes this network appropriate to a regression task, where we are trying to predict some arbitrary numeric value. Other tasks (like classification) might require an activation function on the output.
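
To illustrate the difference, the sketch below compares raw linear outputs with the same values passed through a sigmoid, which is one common choice of output activation for binary classification. The sigmoid is an assumption brought in for this example; the lesson itself does not name a specific output activation, and the input values are made up.

```python
import math


def sigmoid(z):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical raw outputs of a linear unit: any real number is possible,
# which suits regression.
raw_outputs = [-3.0, 0.0, 3.0]

# After a sigmoid, the outputs can be read as probabilities instead.
probabilities = [sigmoid(z) for z in raw_outputs]
print(probabilities[1])  # 0.5
```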

Building Sequential Models

The Sequential model we've been using will connect together a list of layers in order from first to last: the first layer gets the input, the last layer produces the output. This creates the model in the figure above:

```python
from tensorflow import keras
from tensorflow.keras import layers

model = keras.Sequential([
    # the hidden ReLU layers
    layers.Dense(units=4, activation='relu', input_shape=[2]),
    layers.Dense(units=3, activation='relu'),
    # the linear output layer
    layers.Dense(units=1),
])
```

Be sure to pass all the layers together in a list, like `[layer, layer, layer, ...]`, instead of as separate arguments. To add an activation function to a layer, just give its name in the `activation` argument.
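
To show what "connecting layers in order" amounts to, here is a rough plain-Python sketch of the same 2 → 4 → 3 → 1 architecture. It is not how Keras is implemented: the helpers `dense`, `sequential`, and `constant_layer` are hypothetical, and the constant weights are made up so the arithmetic is easy to follow (a real model starts from random weights and learns them).

```python
def relu(v):
    return max(0.0, v)


def dense(x, W, b, activation=None):
    """Dense layer: W @ x + b, optionally followed by an activation."""
    out = [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]
    return [activation(v) for v in out] if activation else out


def sequential(layer_specs, x):
    """Feed x through the layers in order; each output is the next input."""
    for W, b, activation in layer_specs:
        x = dense(x, W, b, activation)
    return x


def constant_layer(n_in, n_out, value, activation=None):
    """Hypothetical helper: every weight equals `value`, every bias is 0."""
    W = [[value] * n_in for _ in range(n_out)]
    b = [0.0] * n_out
    return (W, b, activation)


network = [
    constant_layer(2, 4, 0.5, relu),    # hidden ReLU layer, 4 units
    constant_layer(4, 3, 0.25, relu),   # hidden ReLU layer, 3 units
    constant_layer(3, 1, 1.0),          # linear output layer, 1 unit
]

prediction = sequential(network, [1.0, 3.0])
n_params = sum(len(W) * len(W[0]) + len(b) for W, b, _ in network)
print(prediction)  # [6.0]
print(n_params)    # 31
```

The parameter count follows from the layer sizes: (2·4 + 4) + (4·3 + 3) + (3·1 + 1) = 31 weights and biases in total.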

Your Turn

Now, create a deep neural network for the Concrete dataset.
