Introduction

介绍

Now that you've seen the layers a convnet uses to extract features, it's time to put them together and build a network of your own!

现在您已经了解了卷积网络用于提取特征的各层,是时候将它们组合在一起并构建自己的网络了!

Simple to Refined

从简单到精细

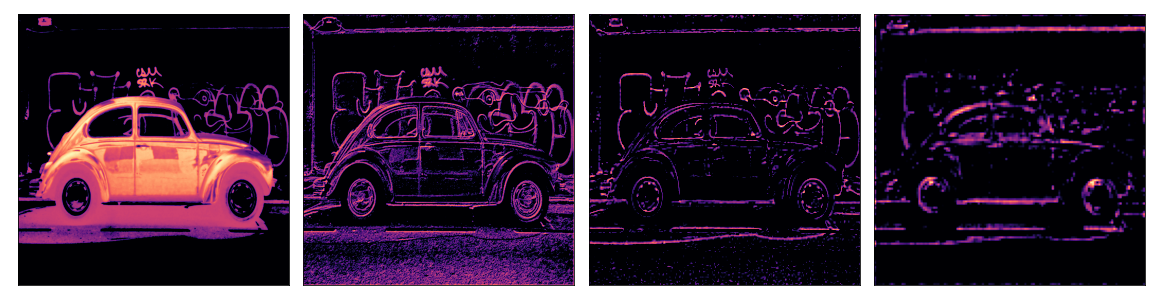

In the last three lessons, we saw how convolutional networks perform feature extraction through three operations: filter, detect, and condense. A single round of feature extraction can only extract relatively simple features from an image, things like simple lines or contrasts. These are too simple to solve most classification problems. Instead, convnets will repeat this extraction over and over, so that the features become more complex and refined as they travel deeper into the network.

在过去的三节课中,我们了解了卷积网络如何通过三个操作执行特征提取:过滤、检测和压缩。单轮特征提取只能从图像中提取相对简单的特征,例如简单的线条或对比度。这些特征太简单了,无法解决大多数分类问题。相反,卷积网络会一遍又一遍地重复这种提取,这样随着特征在网络中越走越深,它们就会变得更加复杂和精细。
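To make one round of filter, detect, and condense concrete, here is a minimal NumPy sketch. The toy 6x6 image and the edge-detecting kernel are made up for illustration, and the helper names (`filter_step`, `detect_step`, `condense_step`) are hypothetical rather than part of Keras. A real Conv2D layer learns its kernels during training instead of using a hand-written one.

```python
import numpy as np

def filter_step(image, kernel):
    """Filter: slide the kernel over the image (no padding, stride 1)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def detect_step(feature_map):
    """Detect: ReLU keeps positive responses and zeroes out the rest."""
    return np.maximum(feature_map, 0.0)

def condense_step(feature_map, size=2):
    """Condense: max pooling over non-overlapping size x size windows."""
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    trimmed = feature_map[:h, :w]
    return trimmed.reshape(h // size, size, w // size, size).max(axis=(1, 3))

# Toy image (made up): dark left half, bright right half, i.e. a vertical edge.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written kernel (an assumption) that responds to dark-to-bright vertical edges.
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

features = condense_step(detect_step(filter_step(image, kernel)))
print(features.shape)
print(features)
```

The 6x6 image shrinks to a 2x2 feature map that only records "an edge is present here". This is the kind of simple feature a single round can produce.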

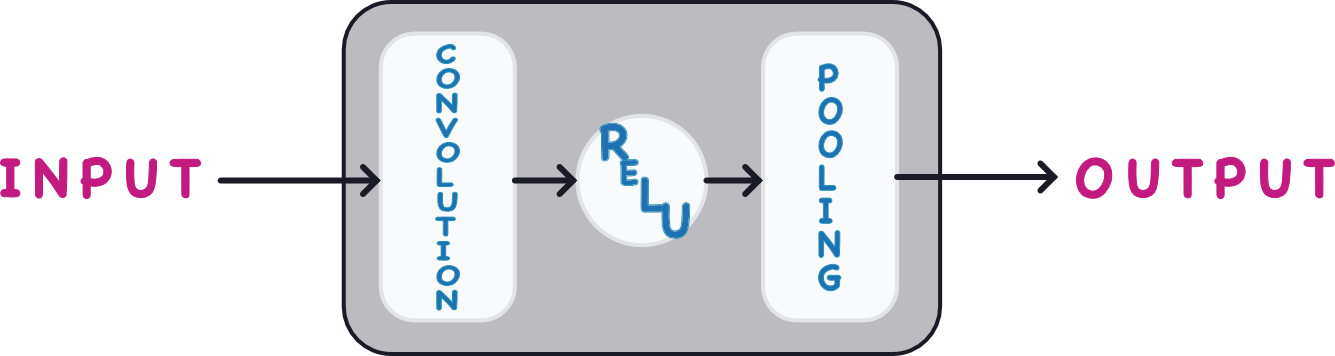

Convolutional Blocks

卷积块

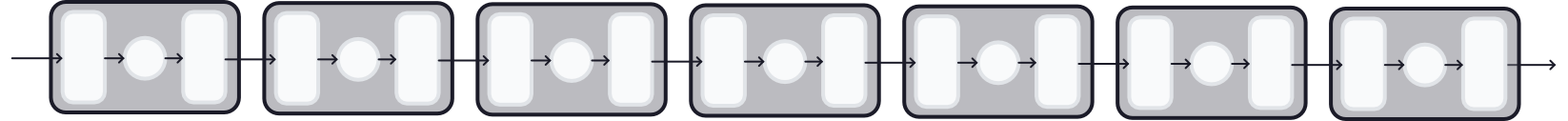

A convnet does this by passing the features through long chains of convolutional blocks, each of which performs one round of this extraction.

卷积网络通过让特征依次经过一长串卷积块来实现这一点,每个卷积块执行一轮这样的提取。

These convolutional blocks are stacks of Conv2D and MaxPool2D layers, whose role in feature extraction we learned about in the last few lessons.

这些卷积块是Conv2D和MaxPool2D层的堆叠,我们在前几节课中了解了它们在特征提取中的作用。

Each block represents a round of extraction, and by composing these blocks the convnet can combine and recombine the features produced, growing them and shaping them to better fit the problem at hand. The deep structure of modern convnets is what allows this sophisticated feature engineering and has been largely responsible for their superior performance.

每个块代表一轮提取,通过组合这些块,卷积网络可以组合和重新组合生成的特征,使其增长并塑造成更适合手头的问题。现代卷积网络的深层结构使这种复杂的特征工程成为可能,并且在很大程度上决定了它们的卓越性能。
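One way to see why stacking blocks lets features grow more complex is to track how large a patch of the input image each output value depends on, often called its receptive field. The sketch below is plain Python. It assumes, purely for illustration, that every block is a 3x3 convolution with stride 1 followed by 2x2 max pooling with stride 2. The function name `receptive_field` is hypothetical.

```python
def receptive_field(layers):
    """Return the receptive field (in input pixels) after each layer.

    `layers` is a list of (kernel_size, stride) pairs applied in order.
    """
    size, jump = 1, 1  # one input pixel; neighbouring outputs are 1 pixel apart
    sizes = []
    for kernel, stride in layers:
        size += (kernel - 1) * jump  # each extra kernel tap reaches `jump` pixels further
        jump *= stride               # striding spreads neighbouring outputs apart
        sizes.append(size)
    return sizes

# Assumed block: 3x3 convolution (stride 1), then 2x2 max pooling (stride 2).
block = [(3, 1), (2, 2)]

sizes = receptive_field(block * 3)   # three blocks chained together
after_each_block = sizes[1::2]       # value after each pooling layer
print(after_each_block)
```

Under these assumptions, each additional block lets a single output value summarize a larger region of the image, which is what allows later blocks to combine simple features into more complex ones.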

Example - Design a Convnet

示例 - 设计卷积网络

Let's see how to define a deep convolutional network capable of engineering complex features. In this example, we'll create a Keras Sequential model and then train it on our Cars dataset.

让我们看看如何定义能够设计复杂特征的深度卷积网络。在此示例中,我们将创建一个 Keras Sequential 模型,然后在我们的 Cars 数据集上对其进行训练。

Step 1 - Load Data

步骤 1 - 加载数据

This hidden cell loads the data.

此隐藏单元加载数据。

# Imports
import os, warnings
import matplotlib.pyplot as plt
from matplotlib import gridspec

import numpy as np
import tensorflow as tf
from tensorflow.keras.preprocessing import image_dataset_from_directory

# Reproducibility
def set_seed(seed=31415):
    np.random.seed(seed)
    tf.random.set_seed(seed)
    os.environ['PYTHONHASHSEED'] = str(seed)
    os.environ['TF_DETERMINISTIC_OPS'] = '1'
set_seed()

# Set Matplotlib defaults
plt.rc('figure', autolayout=True)
plt.rc('axes', labelweight='bold', labelsize='large',
       titleweight='bold', titlesize=18, titlepad=10)
plt.rc('image', cmap='magma')
warnings.filterwarnings("ignore") # to clean up output cells

# Load training and validation sets
ds_train_ = image_dataset_from_directory(
    '../input/car-or-truck/train',
    labels='inferred',
    label_mode='binary',
    image_size=[128, 128],
    interpolation='nearest',
    batch_size=64,
    shuffle=True,
)
ds_valid_ = image_dataset_from_directory(
    '../input/car-or-truck/valid',
    labels='inferred',
    label_mode='binary',
    image_size=[128, 128],
    interpolation='nearest',
    batch_size=64,
    shuffle=False,
)

# Data Pipeline
def convert_to_float(image, label):
    image = tf.image.convert_image_dtype(image, dtype=tf.float32)
    return image, label

AUTOTUNE = tf.data.experimental.AUTOTUNE
ds_train = (
    ds_train_
    .map(convert_to_float)
    .cache()
    .prefetch(buffer_size=AUTOTUNE)
)
ds_valid = (
    ds_valid_
    .map(convert_to_float)
    .cache()
    .prefetch(buffer_size=AUTOTUNE)
)

Found 5117 files belonging to 2 classes.

Found 5051 files belonging to 2 classes.

Step 2 - Define Model

第 2 步 - 定义模型

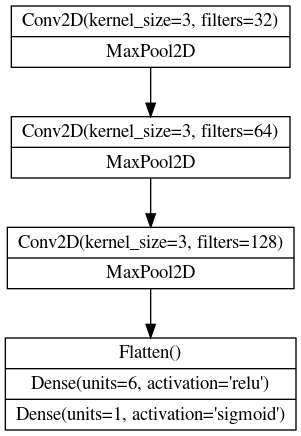

Here is a diagram of the model we'll use:

以下是我们将使用的模型图:

Now we'll define the model. See how our model consists of three blocks of Conv2D and MaxPool2D layers (the base) followed by a head of Dense layers. We can translate this diagram more or less directly into a Keras Sequential model just by filling in the appropriate parameters.

现在我们将定义模型。看看我们的模型如何由三个 Conv2D 和 MaxPool2D 层组成的块(基础)以及后面由 Dense 层组成的头部构成。我们只需填写适当的参数,就可以将此图或多或少直接转换为 Keras Sequential 模型。

from tensorflow import keras
from tensorflow.keras import layers

model = keras.Sequential([

    # First Convolutional Block
    layers.Conv2D(filters=32, kernel_size=5, activation="relu", padding='same',
                  # give the input dimensions in the first layer
                  # [height, width, color channels(RGB)]
                  input_shape=[128, 128, 3]),
    layers.MaxPool2D(),

    # Second Convolutional Block
    layers.Conv2D(filters=64, kernel_size=3, activation="relu", padding='same'),
    layers.MaxPool2D(),

    # Third Convolutional Block
    layers.Conv2D(filters=128, kernel_size=3, activation="relu", padding='same'),
    layers.MaxPool2D(),

    # Classifier Head
    layers.Flatten(),
    layers.Dense(units=6, activation="relu"),
    layers.Dense(units=1, activation="sigmoid"),
])

model.summary()

Model: "sequential"

_________________________________________________________________
 Layer (type)                    Output Shape              Param #
=================================================================
 conv2d (Conv2D)                 (None, 128, 128, 32)      2432
 max_pooling2d (MaxPooling2D)    (None, 64, 64, 32)        0
 conv2d_1 (Conv2D)               (None, 64, 64, 64)        18496
 max_pooling2d_1 (MaxPooling2D)  (None, 32, 32, 64)        0
 conv2d_2 (Conv2D)               (None, 32, 32, 128)       73856
 max_pooling2d_2 (MaxPooling2D)  (None, 16, 16, 128)       0
 flatten (Flatten)               (None, 32768)             0
 dense (Dense)                   (None, 6)                 196614
 dense_1 (Dense)                 (None, 1)                 7
=================================================================
Total params: 291405 (1.11 MB)
Trainable params: 291405 (1.11 MB)
Non-trainable params: 0 (0.00 Byte)

_________________________________________________________________

Notice in this definition how the number of filters doubles block by block: 32, 64, 128. This is a common pattern. Since each MaxPool2D layer reduces the size of the feature maps, we can afford to increase the number of feature maps we create.

请注意,此定义中过滤器的数量逐块增加一倍:32、64、128。这是一种常见模式。由于 MaxPool2D 层正在减小特征图的大小,因此我们可以增加创建的特征图数量。
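The trade-off between pooling and filter count can be checked with a little arithmetic. The plain-Python sketch below traces the output shapes of the three blocks, assuming `padding='same'` with stride 1 for each Conv2D and the default 2x2 window with stride 2 for each MaxPool2D, as in the model definition. The helper names are hypothetical.

```python
def conv_same_shape(shape, filters):
    """Conv2D with padding='same', stride 1: height/width unchanged, channels = filters."""
    height, width, _ = shape
    return (height, width, filters)

def max_pool_shape(shape, pool=2):
    """MaxPool2D with a pool x pool window and matching stride: height/width divided by pool."""
    height, width, channels = shape
    return (height // pool, width // pool, channels)

shape = (128, 128, 3)  # input: [height, width, color channels]
trace = []
for filters in (32, 64, 128):
    shape = conv_same_shape(shape, filters)
    conv_values = shape[0] * shape[1] * shape[2]  # values produced by the Conv2D layer
    shape = max_pool_shape(shape)
    trace.append((filters, conv_values, shape))
    print(f"filters={filters:3d}  conv values per image={conv_values:6d}  after pooling={shape}")

# The head flattens the last feature maps and feeds them to Dense(units=6).
flatten_units = shape[0] * shape[1] * shape[2]
dense_params = flatten_units * 6 + 6  # weights plus one bias per unit
print(flatten_units, dense_params)
```

Each pooling step quarters the spatial area, so even with twice as many filters, every block produces half as many values per image as the one before it. The flattened size and the first Dense layer's parameter count match the `model.summary()` output.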

Step 3 - Train

步骤 3 - 训练

We can train this model just like the model from Lesson 1: compile it with an optimizer along with a loss and metric appropriate for binary classification.

我们可以像训练第 1 课中的模型一样训练此模型:使用优化器以及适合二元分类的损失和指标对其进行编译。
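For intuition about what the loss and metric measure, here is a minimal plain-Python sketch of binary cross-entropy and binary accuracy. The labels and predicted probabilities are made up for illustration. The clipping value `eps` and the 0.5 threshold are assumptions chosen to mirror common defaults, and this is not the Keras implementation itself.

```python
import math

def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)

def binary_accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of examples whose thresholded prediction matches the label."""
    correct = sum(int(p > threshold) == y for y, p in zip(y_true, y_prob))
    return correct / len(y_true)

# Made-up labels and sigmoid outputs for four images.
y_true = [1, 0, 1, 0]
y_prob = [0.9, 0.2, 0.4, 0.6]

loss = binary_crossentropy(y_true, y_prob)
accuracy = binary_accuracy(y_true, y_prob)
print(f"loss={loss:.4f}  accuracy={accuracy:.2f}")
```

The loss penalizes confident wrong answers heavily and is what the optimizer minimizes. The accuracy only counts how many thresholded predictions are right, which makes it easier to interpret but unsuitable as a training signal.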

model.compile(
    optimizer=tf.keras.optimizers.Adam(epsilon=0.01),
    loss='binary_crossentropy',
    metrics=['binary_accuracy']
)

history = model.fit(
    ds_train,
    validation_data=ds_valid,
    epochs=40,
    verbose=0,
)

WARNING: All log messages before absl::InitializeLog() is called are written to STDERR

I0000 00:00:1724808878.891870 962 device_compiler.h:186] Compiled cluster using XLA! This line is logged at most once for the lifetime of the process.import pandas as pd

history_frame = pd.DataFrame(history.history)

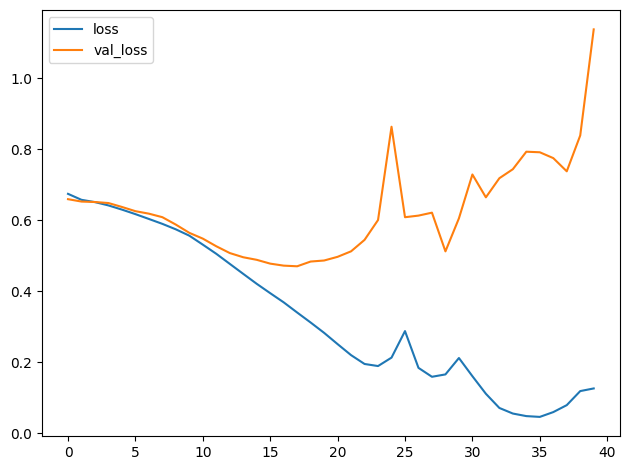

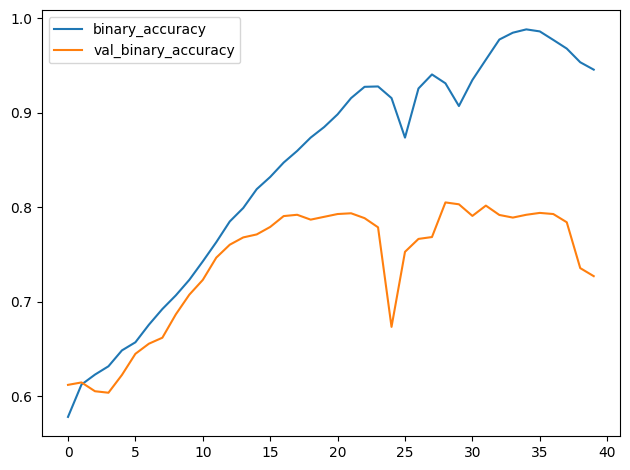

history_frame.loc[:, ['loss', 'val_loss']].plot()

history_frame.loc[:, ['binary_accuracy', 'val_binary_accuracy']].plot();

This model is much smaller than the VGG16 model from Lesson 1: it has only 3 convolutional layers versus the 13 in VGG16 (the "16" in the name counts its 13 convolutional layers plus 3 dense layers). It was nevertheless able to fit this dataset fairly well. We might still be able to improve this simple model by adding more convolutional layers, hoping to create features better adapted to the dataset. This is what we'll try in the exercises.

这个模型比第 1 课中的 VGG16 模型小得多:只有 3 个卷积层,而 VGG16 有 13 个卷积层(名称中的“16”指 13 个卷积层加 3 个全连接层)。但它仍然能够较好地拟合这个数据集。我们可能仍然能够通过添加更多卷积层来改进这个简单的模型,希望创建更适合数据集的特征。这就是我们将在练习中尝试的。
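To put "much smaller" into numbers, the plain-Python sketch below counts the convolutional parameters of both networks. The layer list for this lesson's model comes from the definition in Step 2. The VGG16 list is the published configuration of thirteen 3x3 convolutions (VGG16 also has 3 dense layers, for 16 weight layers in total) and is written out by hand here rather than read from a loaded model. The helper names are hypothetical.

```python
def conv2d_params(kernel_size, in_channels, filters):
    """Weights (kernel_size^2 * in_channels * filters) plus one bias per filter."""
    return kernel_size * kernel_size * in_channels * filters + filters

def conv_base_params(conv_layers, in_channels=3):
    """Sum Conv2D parameters over (kernel_size, filters) pairs applied in order."""
    total = 0
    for kernel_size, filters in conv_layers:
        total += conv2d_params(kernel_size, in_channels, filters)
        in_channels = filters  # the next layer sees this layer's feature maps
    return total

# This lesson's model: one Conv2D per block.
lesson_convs = [(5, 32), (3, 64), (3, 128)]

# VGG16's convolutional layers, written out by hand (all use 3x3 kernels).
vgg16_filters = (64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, 512)
vgg16_convs = [(3, filters) for filters in vgg16_filters]

lesson_total = conv_base_params(lesson_convs)
vgg16_total = conv_base_params(vgg16_convs)
print(len(lesson_convs), lesson_total)
print(len(vgg16_convs), vgg16_total)
print(f"VGG16's convolutional base is about {vgg16_total / lesson_total:.0f}x larger")
```

Pooling layers add no parameters, so these totals cover the whole convolutional base of each network.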

Conclusion

结论

In this tutorial, you saw how to build a custom convnet composed of many convolutional blocks and capable of complex feature engineering.

在本教程中,您了解了如何构建由许多卷积块组成且能够进行复杂特征工程的自定义卷积网络。

Your Turn

轮到你了

In the exercises, you'll create a convnet that performs as well on this problem as VGG16 does -- without pretraining! Try it now!

在练习中,您将创建一个在这个问题上表现与 VGG16 一样好的卷积网络——无需预训练!立即尝试!